ANALYSIS OF RALF VANDEBERGH'S NANOSAIL IMAGES

As a "textbook case", this analysis examines several images of Nanosail taken in April 2011 and published on different forums and groups, with regard to the accompanying claims that the images show the real colors, size and shape of the satellite. The general conclusion of this seven-step analysis is available at the end of this page.

Reading this page about the reliability of images beforehand may be useful.

PART 1: SIZE

The size of Nanosail is 3 metres (10 feet). Seen from 740 km (460 miles) as in the image above, this corresponds to an apparent diameter of less than one arc second (about 0.8 arcsec). This is less than the size of the Airy disc, whose diameter is 1.1 arcsec for a 10" telescope. Thus, as the comparison of size with the Airy disc shown above indicates, the size of Nanosail in the image is not mainly related to its real size and shape but is caused by atmospheric turbulence or other causes of spreading (manual tracking, defocus etc.).
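The two figures above can be checked with a short calculation. This is only a sketch: the wavelength of 550 nm (green light) is an assumption that is not given in the text, and the function names are purely illustrative.

```python
import math

ARCSEC_PER_RAD = 180 / math.pi * 3600  # about 206265 arcsec per radian

def apparent_size_arcsec(size_m, distance_m):
    """Small-angle apparent size of an object, in arc seconds."""
    return size_m / distance_m * ARCSEC_PER_RAD

def airy_disc_diameter_arcsec(aperture_m, wavelength_m=550e-9):
    """Angular diameter of the Airy disc (to the first dark ring): 2.44 * lambda / D.

    The default wavelength of 550 nm is an assumption, not a value from the text.
    """
    return 2.44 * wavelength_m / aperture_m * ARCSEC_PER_RAD

# 3 m sail seen from 740 km, and a 10 inch (254 mm) aperture
sail = apparent_size_arcsec(3.0, 740e3)
airy = airy_disc_diameter_arcsec(10 * 0.0254)

print(f"Nanosail apparent size: {sail:.2f} arcsec")
print(f"Airy disc diameter:     {airy:.2f} arcsec")
print("Sail smaller than Airy disc:", sail < airy)
```

With these inputs the sail comes out slightly above 0.8 arcsec and the Airy disc close to 1.1 arcsec, consistent with the values quoted above.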

The image above was taken with a 10" telescope at F/4.8 (FL = 1200 mm) with a 15 mm eyepiece, giving a magnification of 80 (1200 divided by 15). Behind the eyepiece is a JVC GR-DX27E camcorder whose maximum zoom corresponds to a FL of 31.2 mm. Therefore the final focal length is at most 2500 mm (31.2 multiplied by 80). With pixels of 3.2 microns, this gives a sampling of 0.26 arcsec per pixel. Since Nanosail is no larger than 0.8 arcsec, it should span about 3 pixels on the sensor. As the "raw" image shown above covers more than 16 pixels, the conclusion is the same as above. The same analysis can be done on this other image, which shows clear symptoms of atmospheric and/or optical color dispersion (see below for explanation):

Note that the images shown above on the right have been enlarged 16 times from the raw image. This is a considerable factor, and no planetary imager ever enlarges his images by that much (2 times at most). Displaying the image this way has no advantage other than suggesting that the apparent size of the satellite is much larger than in reality (all the more so in the absence of any scale beside the satellite), and it transforms many kinds of artifacts into something that may look like details, as seen below.
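The sampling figures used in this part follow from the equipment data given above (focal lengths, pixel size). A minimal sketch of the arithmetic, with illustrative variable names:

```python
import math

ARCSEC_PER_RAD = 180 / math.pi * 3600  # about 206265 arcsec per radian

telescope_fl_mm = 1200.0   # 10" telescope at F/4.8
eyepiece_fl_mm = 15.0
camcorder_fl_mm = 31.2     # camcorder lens at maximum zoom
pixel_um = 3.2             # pixel size in microns
sail_arcsec = 0.8          # upper bound on the apparent size of Nanosail

# Afocal projection: the eyepiece magnification multiplies the camcorder focal length
magnification = telescope_fl_mm / eyepiece_fl_mm
effective_fl_mm = camcorder_fl_mm * magnification

# Angle covered by one pixel (small-angle approximation)
sampling = (pixel_um * 1e-3) / effective_fl_mm * ARCSEC_PER_RAD
sail_pixels = sail_arcsec / sampling

print(f"Magnification:          {magnification:.0f}x")
print(f"Effective focal length: {effective_fl_mm:.0f} mm")
print(f"Sampling:               {sampling:.2f} arcsec per pixel")
print(f"Sail size on sensor:    {sail_pixels:.1f} pixels")
```

The result is about 0.26 arcsec per pixel, so a 0.8 arcsec object covers roughly 3 pixels, far fewer than the 16 or more pixels covered by the "raw" image.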

PART 2: COLORS AND ATMOSPHERIC DISPERSION

We all know about atmospheric refraction, which changes the apparent altitude of an object above the horizon. Actually, this effect depends on the wavelength: blue rays are deviated more than red ones. The consequence is that colors are spread like a spectrum, as in the image of Venus below. The Nanosail image above shows just this: red on one side, white (or green) in the center and blue on the other side (the orientation of the colors depends on the orientation of the camera and telescope). This diagram, made by Jean-Pierre Prost, shows that at an altitude of 50°, the spreading across the visible spectrum is already 1.2 arcsec, that is to say, more than the apparent size of Nanosail itself. Thus, any color variation on the sail, even if it could be recorded on the image, would be hidden by dispersion.

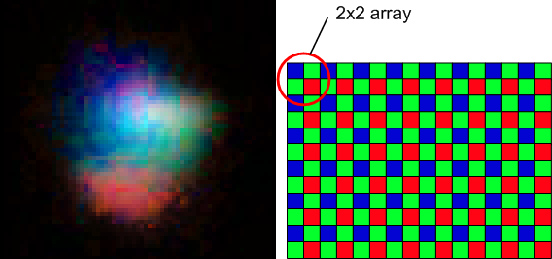

PART 3: COLORS AND BAYER MATRIX

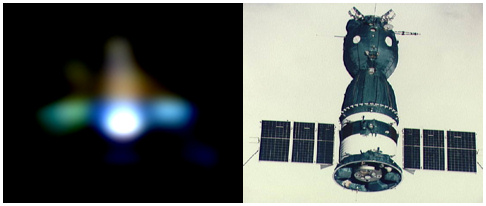

The image above compares the Nanosail "raw" image, just enlarged and color enhanced, with the Bayer matrix of the Sony sensor used in this JVC camcorder. The Bayer matrix pattern is easily recognizable (one row with blue/green pixels, the next one with green/red pixels), as in this image of the star Vega that I took with a color webcam and that looks like a Soyuz ship because of random turbulence distortion:

Note about color artifacts: of course, a spreading of colors may also simply come from atmospheric turbulence (we have all seen the changing colors of twinkling stars). Chromatism may also come from the optics used: a bottom-of-the-range video camcorder lens behind an eyepiece (eyepieces and lenses commonly show off-axis chromatism). Not to mention noise, which is unavoidable in a single raw image and also creates color patches.

PART 4: THE REAL COLOR OF NANOSAIL

The claims accompanying the images above said that the color patches are caused either by the decomposition of sunlight into different colors (as by a prism), or by the colors of the Earth reflected by the sail. In the image below, the blue color is attributed to the Earth.

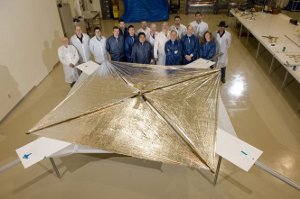

But what color can Nanosail actually show? The sail is a thin transparent polymer comparable to plastic food wrap, with a layer of aluminum. According to a NASA source who has contacted me, its specular reflection rate is very high, i.e. white sunlight is reflected as white without colors, as a simple sheet of aluminum does. In other words, the sail is colorless and takes the color of the light source, as shown in the image below.

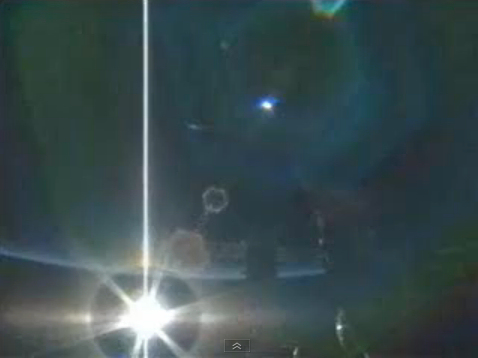

What about a global blue color? During this passage, the satellite saw the Earth at night. Geometrical calculations show that, from Nanosail, the Sun was 5° above the surface of the Earth (3° above the upper atmosphere), and that there was only a thin arc of the Earth visible, just under the Sun. The maximum thickness of this arc was about 1°. The configuration is very comparable to the image below, showing sunset from the ISS. As one can see, the quantity of light coming from the thin arc is completely negligible with regard to the direct sunlight that heavily saturates the sensor (other lights in the image are reflections in the camera lens).

All these elements confirm that the colors shown on these Nanosail images are not real. Even a global blue color is questionable since it cannot be physically explained. On the other hand, it can easily come from a slight change in the color balance adjustment of the camcorder (whose sensor is not calibrated as a CCD camera for astronomy can be). Actually, nothing proves that the colors the camera delivers are reliable. I have found a test of this camera on the Internet which explains that in bright light the colors are nice, but in low light they become poor. Just take a bottom-of-the-range compact camera, take a picture in low light of a scene with objects of different colors, and check whether all colors are totally realistic without any drift to red, blue, magenta, yellow or any other color. You may be surprised. Even users of high-end DSLRs sometimes complain on forums about color drifts. And all of us who have taken deep-sky color images, even with calibrated filters and sensors, know how difficult it is to obtain realistic colors.

PART 5: PROCESSING A SINGLE RAW IMAGE

All the images of Nanosail published come from a single raw frame; no combination of several images has been performed. All planetary imagers practice image combination: they know that a single image is not reliable and that nothing can be deduced from it about very small details:

- atmospheric turbulence distorts the image randomly, as shown by the example of Vega above

- a single raw image contains noise and compression artifacts, especially if it comes from a DV 8-bit compressed video sequence with a sensor having very small pixels (3.2 microns only), as for these images.

Moreover, there is the question of the choice of the image to be processed. Among the thousands of images in the video sequence, one can always find one or several images that look pleasing to the eye and that may look like a satellite. But as we have absolutely no data or reference image that could help us to know the real shape and silhouette of the satellite at the moment of shooting, the degree of freedom is almost infinite. Considering that turbulence distortions can create complex shapes with prominences and sharp angles, how do we know that the image we have chosen is realistic and not only pleasing? Thus the reasoning that consists in:

- choosing an image arbitrarily in the video sequence

- considering that the silhouette of the satellite is the one shown by the image

- deducing that the image is realistic

is logical nonsense.

PART 6: ABOUT MANUAL TRACKING

A simple calculation indicates that the camcorder in this configuration covers a field of about 3 arcmin on the sky, only about 4 times the apparent diameter of Jupiter. Now, install a Newtonian telescope on an EQ5 German mount, take the tube in your arms, look through the 6x viewfinder and try to keep in the field of the camera an object moving at 0.5 to 1.5 degrees per second across the sky. Note that through the 6x viewfinder, the field of view of the camera represents an apparent field of 6x3 = 18 arcmin, about half the lunar diameter as seen with the naked eye. Also note that at 1 degree per second, the satellite crosses the field of the camera in only 1/20 second if the telescope is still.
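These orders of magnitude can be reproduced in a few lines. The sketch below only uses the figures quoted above (3 arcmin field, 6x viewfinder, 0.5 to 1.5 degrees per second); the function name is illustrative.

```python
field_arcmin = 3.0          # field of view of the camcorder on the sky
finder_magnification = 6    # 6x viewfinder

def crossing_time_s(field_arcmin, rate_deg_per_s):
    """Time for an object to cross the field if the telescope stands still."""
    field_deg = field_arcmin / 60.0
    return field_deg / rate_deg_per_s

# Apparent size of the camera field as seen through the viewfinder
apparent_field_arcmin = field_arcmin * finder_magnification

# Crossing times for the range of angular speeds quoted in the text
times = {rate: crossing_time_s(field_arcmin, rate) for rate in (0.5, 1.0, 1.5)}

print(f"Camera field seen through the viewfinder: {apparent_field_arcmin:.0f} arcmin")
for rate, t in times.items():
    print(f"At {rate} deg/s the field is crossed in {t:.3f} s")
```

At 1 degree per second the crossing time is 0.05 s, i.e. the 1/20 second mentioned above.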

Another way to look at these figures is to calculate the movement of the optical tube corresponding to the field of view of the camera: a field of 3 arcmin corresponds to a lateral movement of 1 mm (0.04") of the top of the tube with regard to its bottom. So if you want to keep the satellite in the field, you have to drive the tube by hand with a precision better than plus or minus 0.02" around the satellite trajectory, and of course on both axes (RA and Dec) at the same time.
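The 1 mm figure can be verified as follows. This is a sketch under one assumption that is not stated in the text: the length of the tube is taken as roughly equal to the focal length of the telescope (1200 mm).

```python
import math

# Assumption (not stated in the text): tube length roughly equal to the 1200 mm focal length
tube_length_mm = 1200.0
field_arcmin = 3.0

# Convert the field of view to radians
field_rad = math.radians(field_arcmin / 60.0)

# Lateral movement of the top of the tube relative to its bottom
displacement_mm = tube_length_mm * math.tan(field_rad)
displacement_in = displacement_mm / 25.4

# Keeping the satellite in the field means staying within half the field on each side
tolerance_in = displacement_in / 2.0

print(f"Lateral movement for 3 arcmin: {displacement_mm:.2f} mm ({displacement_in:.3f} in)")
print(f"Required hand precision: +/- {tolerance_in:.3f} in")
```

Under this assumption the displacement is just over 1 mm, which gives the tolerance of about plus or minus 0.02" quoted above.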

The unavoidable consequence is that, on the frames where the satellite is present, its movement can be extremely fast because of manual tracking, adding a cause of blur and distortion. In addition, the chance of having the satellite close to the edge of a frame instead of in the center is very high, adding possible off-axis aberrations coming from the eyepiece and the lens, especially coma and astigmatism (these aberrations can produce more or less elongated or rectangular shapes).

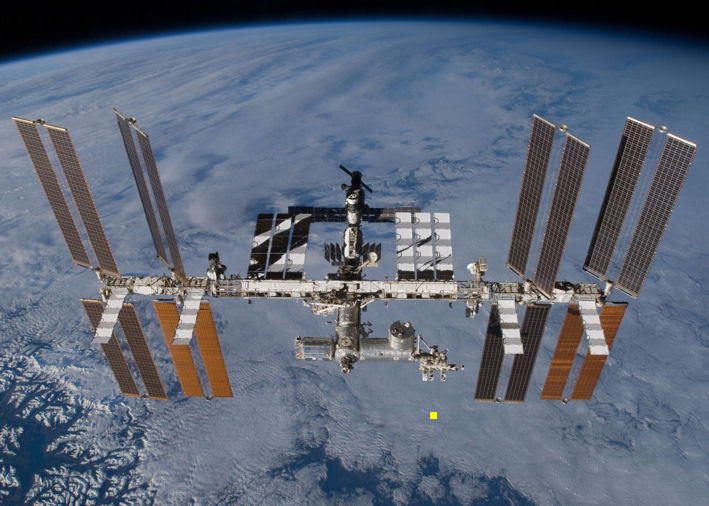

PART 7: CONSISTENCY OF RESOLUTION

The sail dimensions are 3x3 metres (10x10 feet). Seen from a distance of 740 km, the sail has the same apparent size as an object of 1.5 m (5 feet) at the distance of the ISS during a zenithal passage. On the following image of the ISS, I have represented this dimension as a yellow square just under the ISS:

This size is smaller than the size of the boxy EF (Exposed Facility) modules at the end of the Kibo lab (just above the yellow square). And yet none of Ralf Vandebergh's ISS images shows the shape of each of these modules, or even simply separates them distinctly.
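The equivalence between the 3 m sail at 740 km and a 1.5 m object at the distance of the ISS is a simple proportion. The sketch below derives the ISS distance implied by these two figures instead of assuming one; the function name is illustrative.

```python
sail_size_m = 3.0
sail_distance_km = 740.0
equivalent_size_m = 1.5   # size quoted in the text for the ISS distance

# ISS distance implied by the two sizes: same apparent size means same size/distance ratio
implied_iss_distance_km = sail_distance_km * equivalent_size_m / sail_size_m

def equivalent_size(size_m, distance_km, new_distance_km):
    """Size of an object at new_distance_km with the same apparent size."""
    return size_m * new_distance_km / distance_km

check_m = equivalent_size(sail_size_m, sail_distance_km, implied_iss_distance_km)

print(f"Implied ISS distance: {implied_iss_distance_km:.0f} km")
print(f"Equivalent size at that distance: {check_m:.1f} m")
```

The implied distance is half of 740 km, which is the order of magnitude of the ISS altitude during a zenithal passage.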

All the evidence above demonstrates that the "details" of these Nanosail images are not real and are even physically impossible to record in these conditions (equipment used, shooting and processing methods); it is like trying to measure the width of a hair with a common ruler. They are artifacts:

- the colors come from a combination of different causes: noise, Bayer matrix, atmospheric turbulence and dispersion, optical chromatism and/or color balance adjustment,

- the size and shape are caused by spreading and distortion due to atmospheric turbulence and are not related to the real size and shape of the satellite.

More generally, recording details on an object whose apparent size is smaller than that of the main satellites of Jupiter (Io, Europa, Ganymede, Callisto) on a single raw image is a feat that has never been achieved, even by the best planetary imager, Damian Peach. Even with a bigger telescope (14") and high-end astronomical cameras delivering uncompressed 16-bit images of a steady target, combination of images is mandatory.